INFORMATION THEORY ENTROPY

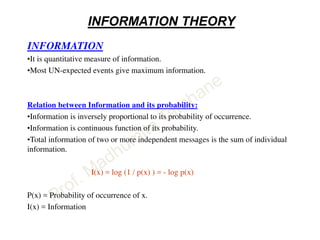

- 1. INFORMATION THEORY: INFORMATION
  - Information can be measured quantitatively.
  - The most unexpected events give the maximum information.
  - Relation between information and its probability:
    - Information decreases as the probability of occurrence increases (it varies inversely with probability).
    - Information is a continuous function of its probability.
    - The total information of two or more independent messages is the sum of the individual information.
  - $I(x) = \log \frac{1}{p(x)} = -\log p(x)$, where $p(x)$ is the probability of occurrence of $x$ and $I(x)$ is its information.
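The definition above can be tried out in a few lines of Python. This is a minimal sketch assuming base-2 logarithms (bits); the helper name `self_information` is introduced here for illustration and is not from the slides. It shows that rarer events carry more information and checks additivity for two independent messages.

```python
import math


def self_information(p: float, base: float = 2.0) -> float:
    """Return I(x) = log(1/p(x)) = -log p(x); base 2 gives bits."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must lie in (0, 1]")
    return math.log(1.0 / p, base)


# Less probable (more unexpected) events carry more information.
for p in (1.0, 0.5, 0.25, 0.125):
    print(f"p = {p:<5}  I = {self_information(p):.3f} bits")

# Additivity: for independent messages, I(x1, x2) = I(x1) + I(x2).
p1, p2 = 0.5, 0.25
assert math.isclose(self_information(p1 * p2),
                    self_information(p1) + self_information(p2))
```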

- 2. MARGINAL ENTROPY: AVERAGE INFORMATION
  - Source S delivers messages $X = \{x_1, x_2, \dots, x_m\}$ with probabilities $\{p_1, p_2, \dots, p_m\}$.
  - A total of $N$ messages are delivered, $N \to \infty$, so $x_1$ occurs $N p_1$ times, $x_2$ occurs $N p_2$ times, and so on.
  - A single occurrence of $x_1$ conveys information $-\log p_1$; $N p_1$ occurrences convey $-N p_1 \log p_1$.
  - Total information $= -N p_1 \log p_1 - N p_2 \log p_2 - \dots - N p_m \log p_m$.
  - Average information = total information / $N$:
    $$H(X) = -p_1 \log p_1 - p_2 \log p_2 - \dots - p_m \log p_m = -\sum_{i=1}^{m} p_i \log p_i$$
  - Condition: complete probability scheme, $\sum_{i=1}^{m} p_i = 1$.
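A minimal Python sketch of the marginal entropy formula, assuming the probabilities are given as a plain list; `entropy` is a hypothetical helper name. It rejects inputs that are not a complete probability scheme and treats $0 \log 0$ as 0.

```python
import math
from typing import Sequence


def entropy(probs: Sequence[float], base: float = 2.0) -> float:
    """Marginal entropy H(X) = -sum_i p_i log p_i of a complete probability scheme."""
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("not a complete probability scheme: probabilities must sum to 1")
    # Symbols with p_i = 0 contribute nothing, because p log p -> 0 as p -> 0.
    return -sum(p * math.log(p, base) for p in probs if p > 0)


print(entropy([1/2, 1/4, 1/8, 1/8]))   # bits per symbol
```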

- 3. UNIT OF INFORMATION
  - $I(x) = -\log_2 p(x)$ bits
  - $I(x) = -\log_e p(x) = -\ln p(x)$ nats
  - $I(x) = -\log_{10} p(x)$ Hartleys
  - $H(X)$ is expressed in bits per symbol (bits per message).
  - Information rate: $R = r_s \, H(X)$ bits/s, where $r_s$ is the symbol rate in symbols/s.
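As a quick numerical check of the units, the sketch below (illustrative only; the constants and `information_rate` are names introduced here) expresses the information of an event with $p = 0.25$ in bits, nats and Hartleys, and computes $R = r_s H(X)$.

```python
import math

# Unit conversions (exact identities): 1 nat = log2(e) bits, 1 Hartley = log2(10) bits.
BITS_PER_NAT = math.log2(math.e)      # about 1.4427
BITS_PER_HARTLEY = math.log2(10)      # about 3.3219


def information_rate(symbol_rate: float, entropy_bits: float) -> float:
    """R = r_s * H(X): bits/s when r_s is in symbols/s and H(X) in bits/symbol."""
    return symbol_rate * entropy_bits


p = 0.25
i_bits = -math.log2(p)
i_nats = -math.log(p)
i_hartleys = -math.log10(p)
print(f"{i_bits:.4f} bits = {i_nats:.4f} nats = {i_hartleys:.4f} Hartleys")
assert math.isclose(i_nats * BITS_PER_NAT, i_bits)
assert math.isclose(i_hartleys * BITS_PER_HARTLEY, i_bits)
```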

- 4. PRACTICE
  - If all $p_i$ except one are zero, $H(X) = 0$.
  - If all $m$ symbols are equiprobable, $H(X) = \log m$.
  - Entropy of a binary source: symbols "0" and "1" with probabilities $p$ and $1-p$:
    $$H(X) = -p \log p - (1-p) \log (1-p) = H(p)$$
  - $X = \{x_1, x_2, x_3, x_4\}$ with probabilities $\{1/2, 1/4, 1/8, 1/8\}$:
    - a. Check whether X is a complete probability scheme.
    - b. Find the entropy.
    - c. Find the entropy if all messages are equiprobable.
    - d. Find the rate of information if the source emits one symbol every millisecond.
  - Answers: a. Yes. b. 1.75 bits/symbol. c. 2 bits/symbol. d. 1750 bits/s.
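The practice problem can be checked with a short Python sketch, assuming base-2 logarithms; `entropy` and `binary_entropy` are illustrative helper names. Part d assumes one symbol per millisecond, i.e. $r_s = 1000$ symbols/s.

```python
import math


def entropy(probs):
    """H(X) = -sum p log2 p in bits, skipping zero-probability symbols."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def binary_entropy(p):
    """H(p) = -p log2 p - (1 - p) log2 (1 - p) for a binary source."""
    return entropy([p, 1.0 - p])


probs = [1/2, 1/4, 1/8, 1/8]
complete = math.isclose(sum(probs), 1.0)   # a. complete probability scheme?
h = entropy(probs)                         # b. entropy in bits/symbol
h_equi = entropy([1/4] * 4)                # c. equiprobable case, equals log2(4)
rate = 1000 * h                            # d. one symbol per ms = 1000 symbols/s
print(complete, h, h_equi, rate)
```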

- 5. Problems:
  1. A discrete source emits one of 5 symbols once every millisecond. The symbol probabilities are 1/2, 1/4, 1/8, 1/16 and 1/16 respectively. Find the information rate.
     - $H(X) = 1.875$ bits/symbol, $R = 1875$ bits/s.
  2. In Morse code, a dash is represented by a current pulse of 3 units duration and a dot by 1 unit duration. The probability of occurrence of a dash is 1/3 of the probability of occurrence of a dot.
     - a. Calculate the information content of a single dot and a single dash.
     - b. Calculate the average information in the dot-dash code.
     - c. Assuming a dot and the pause between symbols are 1 ms each, find the average rate of information.

- 6. Solution to problem 2:
  - a. $P(\text{dot}) = 3/4$, $P(\text{dash}) = 1/4$; $I_{\text{dot}} = -\log_2 (3/4) = 0.415$ bits, $I_{\text{dash}} = -\log_2 (1/4) = 2$ bits.
  - b. $H(X) = \frac{3}{4}(0.415) + \frac{1}{4}(2) = 0.3113 + 0.5 = 0.8113$ bits/symbol.
  - c. Average time per symbol:
    - For every dash, 3 dots occur, and each symbol is followed by a pause.
    - Hence one set is: dot, pause, dot, pause, dot, pause, dash, pause, i.e. $1+1+1+1+1+1+3+1 = 10$ units.
    - That is 10 ms for 4 symbols, so the average time per symbol is 2.5 ms and the symbol rate is 400 symbols/s.
    - $R = 400 \times 0.8113 = 324.52$ bits/s.
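The Morse-code arithmetic can be reproduced as follows. This sketch assumes the same durations as the solution (dot = 1 ms, dash = 3 ms, a 1 ms pause after every symbol) and the typical 4-symbol group of 3 dots and 1 dash; variable names are illustrative.

```python
import math

p_dot, p_dash = 3/4, 1/4           # P(dash) = P(dot)/3 and P(dot) + P(dash) = 1
i_dot = -math.log2(p_dot)          # information in one dot, bits
i_dash = -math.log2(p_dash)        # information in one dash, bits
h = p_dot * i_dot + p_dash * i_dash

# Durations in ms: dot = 1, dash = 3, pause after every symbol = 1.
# A typical group of 4 symbols: 3 dots + 1 dash, each followed by a pause.
group_ms = 3 * (1 + 1) + (3 + 1)   # 10 ms
avg_ms = group_ms / 4              # 2.5 ms per symbol
symbol_rate = 1000 / avg_ms        # symbols per second
rate = symbol_rate * h             # bits per second
print(f"I_dot={i_dot:.3f} I_dash={i_dash:.0f} H={h:.4f} R={rate:.2f} bits/s")
```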

- 7. Show that the entropy of a source is maximum when its symbols are equiprobable.
  - $$H(X) - \log m = \sum_i p_i \log \frac{1}{p_i} - \log m = \sum_i p_i \log \frac{1}{p_i} - \sum_i p_i \log m = \sum_i p_i \log \frac{1}{p_i m}$$
  - Using the property $\ln a \le a - 1$, i.e. $\log_2 a \le (a-1)\log_2 e$:
    $$H(X) - \log m \le \sum_i p_i \left(\frac{1}{p_i m} - 1\right)\log_2 e = \left(\sum_{i=1}^{m} \frac{1}{m} - \sum_i p_i\right)\log_2 e = (1 - 1)\log_2 e = 0$$
  - Hence $H(X) - \log m \le 0$, i.e. $H(X) \le \log m$, with equality when $p_i = 1/m$ for all $i$.
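The proof is general; a numerical spot check (not a proof) can still make the bound concrete. This hypothetical sketch draws many random distributions over $m = 5$ symbols with a fixed seed and confirms that none exceeds $\log_2 m$, while the equiprobable case attains it.

```python
import math
import random


def entropy(probs):
    """H in bits, skipping zero probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def random_distribution(m, rng):
    """An arbitrary distribution over m symbols: normalised random weights."""
    weights = [rng.random() for _ in range(m)]
    total = sum(weights)
    return [w / total for w in weights]


rng = random.Random(0)                 # fixed seed so the check is reproducible
m = 5
bound = math.log2(m)
smallest_gap = min(bound - entropy(random_distribution(m, rng)) for _ in range(10_000))
print(f"log2 m = {bound:.4f}, smallest log2 m - H(X) over the trials = {smallest_gap:.6f}")
assert smallest_gap >= 0.0
assert math.isclose(entropy([1 / m] * m), bound)   # equality for equiprobable symbols
```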

- 8. JOINT ENTROPY
  - Two sources of information X and Y give messages $x_1, x_2, \dots, x_m$ and $y_1, y_2, \dots, y_n$ respectively.
  - They can be the input and output of a communication system.
  - The joint event of X and Y is considered.
  - It must be a complete probability scheme, i.e. the probabilities of all possible joint events of X and Y sum to 1:
    $$\sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) = 1$$
  - Entropy is calculated in the same way as marginal entropy.
  - Information delivered when one pair $(x_i, y_j)$ occurs once is $-\log p(x_i, y_j)$.
  - This pair occurs $N_{ij}$ times out of a total of $N$, conveying $-N_{ij} \log p(x_i, y_j)$.
  - Total information over all combinations of $i$ and $j$:
    $$-\sum_{i=1}^{m}\sum_{j=1}^{n} N_{ij} \log p(x_i, y_j)$$

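- 9. With $p(x_i, y_j) = N_{ij}/N$, the joint entropy is the total information divided by $N$:
  $$H(X, Y) = -\sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) \log p(x_i, y_j)$$
  - Also, the marginal probabilities follow from the joint probabilities:
    $$\sum_{i=1}^{m} p(x_i, y_j) = p(y_j), \qquad \sum_{j=1}^{n} p(x_i, y_j) = p(x_i)$$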

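- 10. Joint probabilities $p(x_i, y_j)$:

  |          | $y_1$ | $y_2$ | $y_3$ | $y_4$ |
  |----------|-------|-------|-------|-------|
  | $x_1$    | 0.25  | 0     | 0     | 0     |
  | $x_2$    | 0.1   | 0.3   | 0     | 0     |
  | $x_3$    | 0     | 0.05  | 0.1   | 0     |
  | $x_4$    | 0     | 0     | 0.05  | 0.1   |
  | $x_5$    | 0     | 0     | 0.05  | 0     |
  | $p(y_j)$ | 0.35  | 0.35  | 0.2   | 0.1   |

  - Find $p(x_i)$.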

- 11. Meaning of the entropies:
  - $H(X)$: average information per character at the source, i.e. the entropy of the source.
  - $H(Y)$: average information per character at the destination, i.e. the entropy at the receiver.
  - $H(X,Y)$: average information per pair of transmitted and received characters, i.e. the average uncertainty of the communication system as a whole. In $H(X,Y)$, both X and Y are uncertain.
  - $H(Y/X)$: a specific $x_i$ is transmitted (known), and one of the permissible $y_j$ is received with a given probability. This conditional entropy measures the information about the receiver when it is known that a particular $x_i$ was transmitted. It is the noise or error in the channel.
  - $H(X/Y)$: a specific $y_j$ is received (known), and it may be the result of one of the $x_i$ with a given probability. This conditional entropy measures the information about the source when it is known that a particular $y_j$ was received. It is the equivocation, a measure of how well the input can be recovered from the output.

- 12. CONDITIONAL ENTROPY H(X/Y)
  - A complete probability scheme is required.
  - Bayes' theorem: $p(x_i, y_j) = p(x_i)\, p(y_j/x_i) = p(y_j)\, p(x_i/y_j)$
  - A particular $y_j$ that is received can only have come from one of $x_1, x_2, \dots, x_m$, so
    $$p(x_1/y_j) + p(x_2/y_j) + \dots + p(x_m/y_j) = \sum_{i=1}^{m} p(x_i/y_j) = 1$$
  - Similarly, $\sum_{j=1}^{n} p(y_j/x_i) = 1$.

- 13. CONDITIONAL ENTROPY H(X/Y)
  - When $y_j$ is received:
    $$H(X/y_j) = -\sum_{i=1}^{m} p(x_i/y_j) \log p(x_i/y_j)$$
  - The average conditional entropy is obtained by averaging these entropies over all $y_j$.
  - $H(X/y_j)$ occurs as many times as $y_j$ occurs, i.e. $N_{y_j}$ times, so
    $$H(X/Y) = \frac{1}{N}\left(N_{y_1} H(X/y_1) + N_{y_2} H(X/y_2) + \dots + N_{y_n} H(X/y_n)\right) = \sum_{j=1}^{n} p(y_j)\, H(X/y_j)$$
    $$H(X/Y) = -\sum_{i=1}^{m}\sum_{j=1}^{n} p(y_j)\, p(x_i/y_j) \log p(x_i/y_j) = -\sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) \log p(x_i/y_j)$$
  - Similarly,
    $$H(Y/X) = -\sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) \log p(y_j/x_i)$$

- 14. PROBLEMS - 1
  - A transmitter has an alphabet of five letters $x_1, x_2, x_3, x_4, x_5$ and a receiver has an alphabet of four letters $y_1, y_2, y_3, y_4$. The following probabilities are given:

  |       | $y_1$ | $y_2$ | $y_3$ | $y_4$ |
  |-------|-------|-------|-------|-------|
  | $x_1$ | 0.25  | 0     | 0     | 0     |
  | $x_2$ | 0.1   | 0.3   | 0     | 0     |
  | $x_3$ | 0     | 0.05  | 0.1   | 0     |
  | $x_4$ | 0     | 0     | 0.05  | 0.1   |
  | $x_5$ | 0     | 0     | 0.05  | 0     |

  - Identify the probability scheme and find all entropies.

- 15. Answers
  - Hint: $\log_2 x = \log_{10} x / \log_{10} 2 = 3.322 \log_{10} x$
  - The given matrix is $P(X,Y)$.
  - $H(X) = 2.066$ bits/symbol
  - $H(Y) = 1.857$ bits/symbol
  - $H(X,Y) = 2.666$ bits/symbol
  - $H(X/Y) = 0.809$ bits/symbol
  - $H(Y/X) = 0.6$ bits/symbol
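A Python sketch that computes all five entropies of Problem 1 directly from the joint matrix, using the marginal sums of item 9 and the conditional-entropy formulas of item 13. The function name `all_entropies` is illustrative; logarithms are base 2.

```python
import math


def h(probs):
    """Entropy in bits of a list of probabilities (zeros skipped)."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def all_entropies(joint):
    """Return H(X), H(Y), H(X,Y), H(X/Y), H(Y/X) for a joint matrix p(x_i, y_j)."""
    px = [sum(row) for row in joint]                 # p(x_i) = sum_j p(x_i, y_j)
    py = [sum(col) for col in zip(*joint)]           # p(y_j) = sum_i p(x_i, y_j)
    hxy = h([p for row in joint for p in row])
    pairs = [(i, j) for i in range(len(px)) for j in range(len(py)) if joint[i][j] > 0]
    # H(X/Y) = -sum p(x,y) log p(x/y), with p(x/y) = p(x,y) / p(y)
    hx_given_y = -sum(joint[i][j] * math.log2(joint[i][j] / py[j]) for i, j in pairs)
    # H(Y/X) = -sum p(x,y) log p(y/x), with p(y/x) = p(x,y) / p(x)
    hy_given_x = -sum(joint[i][j] * math.log2(joint[i][j] / px[i]) for i, j in pairs)
    return h(px), h(py), hxy, hx_given_y, hy_given_x


P = [[0.25, 0.00, 0.00, 0.00],
     [0.10, 0.30, 0.00, 0.00],
     [0.00, 0.05, 0.10, 0.00],
     [0.00, 0.00, 0.05, 0.10],
     [0.00, 0.00, 0.05, 0.00]]
HX, HY, HXY, HXgY, HYgX = all_entropies(P)
print(f"H(X)={HX:.3f} H(Y)={HY:.3f} H(X,Y)={HXY:.3f} H(X/Y)={HXgY:.3f} H(Y/X)={HYgX:.3f}")
```

Because both conditional entropies are computed from their definitions rather than by subtraction, the printed values also let you check $H(X,Y) = H(X) + H(Y/X) = H(Y) + H(X/Y)$ numerically.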

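- 16. RELATION AMONG ENTROPIES
  $$H(X,Y) = H(X) + H(Y/X) = H(Y) + H(X/Y)$$
  - Start from $H(X,Y) = -\sum_i\sum_j p(x_i, y_j) \log p(x_i, y_j)$ and Bayes' theorem $p(x_i, y_j) = p(x_i)\,p(y_j/x_i) = p(y_j)\,p(x_i/y_j)$:
    $$H(X,Y) = -\sum_{i}\sum_{j} p(x_i, y_j) \log \left[p(x_i)\, p(y_j/x_i)\right]$$
    $$= -\sum_{i}\sum_{j} p(x_i, y_j) \log p(x_i) - \sum_{i}\sum_{j} p(x_i, y_j) \log p(y_j/x_i)$$
  - Since $\sum_j p(x_i, y_j) = p(x_i)$, the first term is $-\sum_i p(x_i) \log p(x_i) = H(X)$, and the second term is $H(Y/X)$:
    $$H(X,Y) = H(X) + H(Y/X)$$
  - Prove the other half.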

- 17. $H(X) \ge H(X/Y)$ and $H(Y) \ge H(Y/X)$
  - Using $\sum_{j=1}^{n} p(x_i, y_j) = p(x_i)$:
    $$H(X/Y) - H(X) = -\sum_i\sum_j p(x_i, y_j) \log p(x_i/y_j) + \sum_i p(x_i) \log p(x_i)$$
    $$= -\sum_i\sum_j p(x_i, y_j) \log p(x_i/y_j) + \sum_i\sum_j p(x_i, y_j) \log p(x_i) = \sum_i\sum_j p(x_i, y_j) \log \frac{p(x_i)}{p(x_i/y_j)}$$
  - Using the property $\ln a \le a - 1$, and $p(x_i, y_j)/p(x_i/y_j) = p(y_j)$:
    $$H(X/Y) - H(X) \le \sum_i\sum_j p(x_i, y_j)\left[\frac{p(x_i)}{p(x_i/y_j)} - 1\right]\log_2 e = \left[\sum_i\sum_j p(y_j)\,p(x_i) - \sum_i\sum_j p(x_i, y_j)\right]\log_2 e \le [1 - 1]\log_2 e = 0$$
  - Hence $H(X/Y) - H(X) \le 0$, i.e. $H(X) \ge H(X/Y)$.
  - Prove $H(X,Y) \le H(X) + H(Y)$.

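- 18. PROBLEMS - 2
  - Identify the following probability scheme and find all entropies.
  - Given $P(X) = [0.3 \;\; 0.4 \;\; 0.3]$ and the matrix (rows $x_1, x_2, x_3$; columns $y_1, y_2, y_3$):
    $$\begin{bmatrix} 3/5 & 1/5 & 1/5 \\ 1/5 & 3/5 & 1/5 \\ 1/5 & 1/5 & 3/5 \end{bmatrix}$$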

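- 19. PROBLEMS - 3
  - Given $p(x) = [0.6 \;\; 0.3 \;\; 0.1]$, find all entropies.
  - (Figure: channel diagram with inputs $x_1, x_2, x_3$ and outputs $y_1, y_2, y_3$; transitions labelled $1-p$, $1-p$, $p$ and $p$.)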

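- 20. PROBLEMS - 4
  - Given $p(x) = (0.3, 0.2, 0.5)$ and the matrix below, find all entropies:
    $$\begin{bmatrix} 0.8 & 0.1 & 0.1 \\ 0.1 & 0.8 & 0.1 \\ 0.1 & 0.1 & 0.8 \end{bmatrix}$$
  - PROBLEMS – 6
  - Given the matrix below, with $p(x) = (1/4, 12/40, 18/40)$, find all entropies:
    $$\begin{bmatrix} 3/40 & 1/20 & 1/8 \\ 1/40 & 3/20 & 1/8 \\ 1/40 & 1/20 & 3/8 \end{bmatrix}$$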

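- 21. Show that in a discrete noise-free channel both conditional entropies are zero.
  - In a discrete noise-free channel, when $x_1$ is transmitted only $y_1$ is received, with probability 1, and so on, so the joint probability matrix is diagonal:
    $$P(X,Y) = \begin{bmatrix} p(x_1,y_1) & 0 & \cdots & 0 \\ 0 & p(x_2,y_2) & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & p(x_m,y_n) \end{bmatrix}$$
  - Show that $H(X/Y)$ and $H(Y/X)$ are zero.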

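- 22. Entropy for a discrete channel with independent input and output
  - There is no correlation between input and output.
  - If $x_1$ is transmitted, any of the $y$'s can be received with equal probability: the probabilities of receiving $y_1, y_2, \dots, y_n$ are equal.
  - The received symbol therefore conveys no information about what was sent, which is not desired.
  - The joint probability matrix takes one of the two forms:
    $$\begin{bmatrix} p_1 & p_1 & \cdots & p_1 \\ p_2 & p_2 & \cdots & p_2 \\ \vdots & & & \vdots \\ p_m & p_m & \cdots & p_m \end{bmatrix} \quad \text{or} \quad \begin{bmatrix} p_1 & p_2 & \cdots & p_n \\ p_1 & p_2 & \cdots & p_n \\ \vdots & & & \vdots \\ p_1 & p_2 & \cdots & p_n \end{bmatrix}$$
  - $\sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) = 1$; in this case $p(x_i, y_j) = p(x_i)\,p(y_j)$.
  - For the first form, $\sum_{i=1}^{m} p_i = 1/n$.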

- 23. For the first form, $p(X) = [n p_1, n p_2, n p_3, \dots, n p_m]$ and $p(Y) = [1/n, 1/n, \dots, 1/n]$. Find all other probabilities and entropies, and show that $H(X) = H(X/Y)$. Try the other case too.

- 24. MUTUAL INFORMATION
  - It is the information gained through the channel.
  - $x_i$ is transmitted with probability $p(x_i)$ (a priori entropy of X).
  - The initial uncertainty of $x_i$ is $-\log p(x_i)$.
  - A $y_j$ is received. Is it due to the transmission of $x_i$?
  - The final uncertainty of the transmission of $x_i$ is $-\log p(x_i/y_j)$ (a posteriori entropy of X).
  - Information gain = net reduction in uncertainty:
    $$I(x_i, y_j) = -\log p(x_i) + \log p(x_i/y_j) = \log \frac{p(x_i/y_j)}{p(x_i)}$$
  - $I(X,Y)$ is the average of $I(x_i, y_j)$ over all values of $i$ and $j$:
    $$I(X,Y) = \sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) \log \frac{p(x_i/y_j)}{p(x_i)}$$
    $$I(X,Y) = H(X) - H(X/Y) = H(Y) - H(Y/X) = H(X) + H(Y) - H(X,Y)$$
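A minimal sketch of the averaged mutual information formula, assuming the input is a joint matrix $p(x_i, y_j)$ given as nested lists (`mutual_information` is an illustrative name). It evaluates Problem 1's matrix and an independent input-output pair, for which $I(X,Y)$ should be zero up to rounding.

```python
import math


def mutual_information(joint):
    """I(X,Y) = sum_ij p(x_i, y_j) log2[p(x_i/y_j) / p(x_i)] in bits.

    Uses p(x_i/y_j) / p(x_i) = p(x_i, y_j) / (p(x_i) p(y_j)).
    """
    px = [sum(row) for row in joint]
    py = [sum(col) for col in zip(*joint)]
    return sum(pxy * math.log2(pxy / (px[i] * py[j]))
               for i, row in enumerate(joint)
               for j, pxy in enumerate(row) if pxy > 0)


# Joint matrix of Problem 1 (item 14)
P = [[0.25, 0.00, 0.00, 0.00],
     [0.10, 0.30, 0.00, 0.00],
     [0.00, 0.05, 0.10, 0.00],
     [0.00, 0.00, 0.05, 0.10],
     [0.00, 0.00, 0.05, 0.00]]
print(f"I(X,Y) = {mutual_information(P):.4f} bits")

# Independent input and output, p(x, y) = p(x) p(y): no information is transferred.
independent = [[a * b for b in (0.25, 0.75)] for a in (0.2, 0.3, 0.5)]
print(f"I(X,Y) = {mutual_information(independent):.2e} bits")
```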

- 25. CHANNEL CAPACITY (in terms of entropy)
  - It is the maximum mutual information that can be transmitted through the channel.
  - Can we minimize $H(Y/X)$ and $H(X/Y)$? No: they are set by the channel's transition probabilities (its noise).
  - Can we maximize $H(X)$ or $H(Y)$? Yes, if all the messages are equiprobable.
  - $C = I(X,Y)_{\max}$, the maximum being taken over the input probabilities: $C = \max_{p(x)}\left[H(X) - H(X/Y)\right]$
  - EFFICIENCY: $\eta = I(X,Y) / C$

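- 26. ASSIGNMENT
  1. Find the mutual information for a noiseless channel.
  2. Given $p(x) = [1/4, 2/5, 3/20, 3/20, 1/20]$, find the efficiency for the following probability matrix:
     $$\begin{bmatrix} 1/4 & 0 & 0 & 0 \\ 1/10 & 3/10 & 0 & 0 \\ 0 & 1/20 & 1/10 & 0 \\ 0 & 0 & 1/20 & 1/10 \\ 0 & 0 & 1/20 & 0 \end{bmatrix}$$
  3. For the matrix $\begin{bmatrix} 2/3 & 1/3 \\ 1/3 & 2/3 \end{bmatrix}$ with $p(x_1) = 3/4$ and $p(x_2) = 1/4$, find $H(X)$, $H(Y)$, $H(X/Y)$, $H(Y/X)$, $I(X,Y)$, $C$ and the efficiency.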

- 27. ASSIGNMENT
  4. a. For the given channel (rows $x_1, x_2$; columns $y_1, y_2, y_3$), find the mutual information $I(X,Y)$ with $p(x) = \{1/2, 1/2\}$:
     $$\begin{bmatrix} 2/3 & 1/3 & 0 \\ 0 & 1/6 & 5/6 \end{bmatrix}$$
     b. If the receiver decides that $x_1$ is the most likely transmitted symbol when $y_2$ is received, other conditional probabilities remaining the same, calculate $I(X,Y)$.
     - Hint for b: find $p(X/Y)$, change $p(x_1/y_2)$ to 1 and $p(x_2/y_2)$ to 0, keep the rest the same, and calculate $I$.

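- 28. Types of Channels – Symmetric channel
  - Every row of the channel matrix contains the same set of numbers $p_1$ to $p_J$.
  - Every column of the channel matrix contains the same set of numbers $q_1$ to $q_I$.
  - Examples:
    $$\begin{bmatrix} 0.2 & 0.2 & 0.3 & 0.3 \\ 0.3 & 0.3 & 0.2 & 0.2 \end{bmatrix} \qquad \begin{bmatrix} 0.2 & 0.3 & 0.5 \\ 0.3 & 0.5 & 0.2 \\ 0.5 & 0.2 & 0.3 \end{bmatrix}$$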

- 29. Types of Channels – Symmetric channel
  - The binary symmetric channel is a special case: 0 and 1 are transmitted and received.
  - $\epsilon$ is the probability of error, $0 \le \epsilon \le 1$:
    $$P(Y/X) = \begin{bmatrix} 1-\epsilon & \epsilon \\ \epsilon & 1-\epsilon \end{bmatrix}$$
  - $H(Y/X)$ is independent of the input distribution and is determined solely by the channel matrix:
    $$H(Y/X) = -\sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) \log p(y_j/x_i)$$

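- 30. Types of Channels – Symmetric channel
  $$H(Y/X) = -\sum_{i=1}^{m}\sum_{j=1}^{n} p(x_i, y_j) \log p(y_j/x_i) = -\sum_{i} p(x_i) \sum_{j} p(y_j/x_i) \log p(y_j/x_i)$$
  - Check with the BSC matrix:
    $$H(Y/X) = -p(x_1)\left[(1-\epsilon)\log(1-\epsilon) + \epsilon \log \epsilon\right] - p(x_2)\left[(1-\epsilon)\log(1-\epsilon) + \epsilon \log \epsilon\right] = -\left[(1-\epsilon)\log(1-\epsilon) + \epsilon \log \epsilon\right]$$
  - Generalizing, since every row contains the same set of numbers, for any row $i$:
    $$H(Y/X) = -\sum_{j} p(y_j/x_i) \log p(y_j/x_i)$$
  - $H(Y/X)$ is independent of the input distribution and is determined solely by the channel matrix.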
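To see numerically that $H(Y/X)$ of a symmetric channel does not depend on the input distribution, the sketch below (helper name is illustrative) evaluates it for a BSC with $\epsilon = 0.1$ under several input distributions, and for a 3x3 matrix whose rows are permutations of (0.2, 0.3, 0.5).

```python
import math


def cond_entropy_y_given_x(px, channel):
    """H(Y/X) = -sum_i p(x_i) sum_j p(y_j/x_i) log2 p(y_j/x_i), in bits."""
    return -sum(pxi * sum(q * math.log2(q) for q in row if q > 0)
                for pxi, row in zip(px, channel))


eps = 0.1
bsc = [[1 - eps, eps],
       [eps, 1 - eps]]
for px in ([0.5, 0.5], [0.9, 0.1], [0.2, 0.8]):
    print(px, round(cond_entropy_y_given_x(px, bsc), 6))   # same value each time

# Symmetric 3x3 channel: every row is a permutation of (0.2, 0.3, 0.5).
sym = [[0.2, 0.3, 0.5],
       [0.3, 0.5, 0.2],
       [0.5, 0.2, 0.3]]
print(round(cond_entropy_y_given_x([0.6, 0.3, 0.1], sym), 6))
```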

- 31. Types of Channels – Lossless channel
  - The output uniquely specifies the input, so $H(X/Y) = 0$ (noiseless channel).
  - The matrix has one and only one non-zero element in each column.
  - Channels with error probability 0 as well as 1 are noiseless channels.
  - Example (rows $X_1$ to $X_3$, columns $Y_1$ to $Y_6$):
    $$P(Y/X) = \begin{bmatrix} 1/2 & 1/2 & 0 & 0 & 0 & 0 \\ 0 & 0 & 3/5 & 3/10 & 1/10 & 0 \\ 0 & 0 & 0 & 0 & 0 & 1 \end{bmatrix}$$

- 32. Types of Channels – Deterministic channel
  - The input uniquely specifies the output, so $H(Y/X) = 0$.
  - The matrix has one and only one non-zero element in each row.
  - Since each row must also sum to 1, the elements are either 0 or 1.
  - Example (rows $X_1$ to $X_6$, columns $Y_1$ to $Y_3$):
    $$P(Y/X) = \begin{bmatrix} 1 & 0 & 0 \\ 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$

- 33. Types of Channels – Useless channel
  - X and Y are independent for all input distributions, so $H(X/Y) = H(X)$.
  - Proof of sufficiency:
    - Assume the channel matrix has identical rows, and the source is arbitrary (not necessarily equiprobable).
    - For every output, because $p(y_j/x_i)$ is the same for every $i$:
      $$p(y_j) = \sum_{i=1}^{m} p(x_i, y_j) = \sum_i p(x_i)\, p(y_j/x_i) = p(y_j/x_i) \sum_i p(x_i) = p(y_j/x_i)$$
    - Hence $p(x_i, y_j) = p(x_i)\,p(y_j/x_i) = p(x_i)\,p(y_j)$: X and Y are independent.

- 34. Types of Channels – Useless channel
  - Proof of necessity:
    - Assume the rows of the channel matrix are not all identical, and the source is equiprobable, $p(x_i) = 1/M$.
    - Then the elements of some column $j_0$ are not all identical. Let $p(y_{j_0}/x_{i_0})$ be the largest element in that column.
    - Then
      $$p(y_{j_0}) = \sum_{i=1}^{M} p(x_i)\, p(y_{j_0}/x_i) = \frac{1}{M}\sum_i p(y_{j_0}/x_i) < p(y_{j_0}/x_{i_0})$$
    - Hence $p(y_{j_0}) \ne p(y_{j_0}/x_{i_0})$, so $p(x_{i_0}, y_{j_0}) = p(x_{i_0})\,p(y_{j_0}/x_{i_0}) \ne p(x_{i_0})\,p(y_{j_0})$.
    - So for a uniform distribution of X, X and Y are not independent, and the channel is not useless.

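- 35. DISCRETE COMMUNICATION CHANNELS – BINARY SYMMETRIC CHANNEL (BSC)
  - One of the most widely used channels.
  - Symmetric means $p_{11} = p_{22}$ and $p_{12} = p_{21}$, where $p_{11}$, $p_{22}$ are the correct transitions (0 to 0, 1 to 1) and $p_{12}$, $p_{21}$ the crossovers.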

- 36. BSC capacity
  - $q$ is the probability of correct reception and $p$ is the probability of wrong reception, $p + q = 1$.
  - We have to find the channel capacity $C$.
  - Take $p(0) = p(1) = 0.5$; as $p(X)$ is given, the channel diagram gives $p(Y/X)$.
  - Find $p(X,Y)$ and $p(Y)$, then $C = \{H(Y) - H(Y/X)\}_{\max}$:
    $$p(X,Y) = \begin{bmatrix} q/2 & p/2 \\ p/2 & q/2 \end{bmatrix}, \qquad p(Y) = [1/2, \; 1/2]$$
    $$C = 1 + p \log p + q \log q$$
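A sketch of the BSC result, assuming $p$ is the error probability: `h2` is the binary entropy $H(p)$ and all helper names are illustrative. It also evaluates $I(X,Y)$ for unequal input probabilities, showing that $p(0) = p(1) = 0.5$ gives the largest value.

```python
import math


def h2(p):
    """Binary entropy H(p) in bits, with H(0) = H(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(p):
    """C = 1 + p log2 p + q log2 q = 1 - H(p) for error probability p."""
    return 1.0 - h2(p)


def bsc_mutual_information(p, p0):
    """I(X,Y) = H(Y) - H(Y/X) when P(X = 0) = p0 (H(Y/X) = H(p) for a BSC)."""
    py0 = p0 * (1 - p) + (1 - p0) * p
    return h2(py0) - h2(p)


p = 0.25
print(bsc_capacity(p))
for p0 in (0.5, 0.7, 0.9):             # equiprobable input gives the maximum
    print(p0, bsc_mutual_information(p, p0))
```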

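- 37. BINARY ERASURE CHANNEL (BEC)
  - ARQ (automatic repeat request) is common practice in communication.
  - Assume $x_1$ is never received as $y_2$, and vice versa.
  - Output $y_3$ is erased and an ARQ is requested.
  - Find $C$ with $p(X) = [0.5, 0.5]$. The channel matrix (inputs $x_1, x_2$; outputs $y_1, y_2, y_3$; $p$ is the erasure probability) is
    $$P(Y/X) = \begin{bmatrix} q & 0 & p \\ 0 & q & p \end{bmatrix}$$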

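- 38. BEC capacity
  - $p(X,Y) = \begin{bmatrix} q/2 & 0 & p/2 \\ 0 & q/2 & p/2 \end{bmatrix}$
  - $p(Y) = [q/2, \; q/2, \; p]$
  - $p(X/Y) = \begin{bmatrix} 1 & 0 & 1/2 \\ 0 & 1 & 1/2 \end{bmatrix}$
  - $C = H(X) - H(X/Y) = 1 - p = q$
  - $C = q$ = probability of correct reception.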
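The BEC result $C = q$ can be checked by computing $I(X,Y)$ from the channel matrix with equiprobable inputs. In this sketch, `mutual_information` and `bec` are illustrative names, and the erasure output is placed in the third column, as on item 37.

```python
import math


def mutual_information(px, channel):
    """I(X,Y) = sum_ij p(x_i) p(y_j/x_i) log2[p(y_j/x_i) / p(y_j)] in bits."""
    n = len(channel[0])
    py = [sum(px[i] * channel[i][j] for i in range(len(px))) for j in range(n)]
    return sum(px[i] * channel[i][j] * math.log2(channel[i][j] / py[j])
               for i in range(len(px)) for j in range(n)
               if px[i] * channel[i][j] > 0)


def bec(p):
    """Binary erasure channel: outputs y1, y2, y3 (y3 = erasure, probability p)."""
    q = 1 - p
    return [[q, 0.0, p],
            [0.0, q, p]]


for p in (0.0, 0.1, 0.3):
    print(p, mutual_information([0.5, 0.5], bec(p)))   # compare with q = 1 - p
```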

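- 39. CASCADED CHANNELS
  - Two BSCs are cascaded: X to Y through the first BSC and Y to Z through the second, each with correct-reception probability $q$ and error probability $p$.
  - The equivalent channel from X to Z is a BSC with correct-reception probability $q'$ and error probability $p'$:
    $$p(Z/X) = \begin{bmatrix} q' & p' \\ p' & q' \end{bmatrix}$$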

- 40. Capacity of two cascaded BSCs
  - $q' = p^2 + q^2 = 1 - 2pq$
  - $p' = 2pq$
  - Find $C$:
    $$C = 1 + p' \log p' + q' \log q' = 1 + 2pq \log(2pq) + (1 - 2pq)\log(1 - 2pq)$$
  - Calculate $C$ for 3 cascaded stages.

- 41. Repetition of signals
  - Repeating signals is a useful technique to reduce errors and improve channel efficiency.
  - Case I: instead of 0 and 1, send 00 and 11. At the receiver, 00 and 11 are valid outputs; the other combinations (01, 10) are erased.
  - Case II: instead of 0 and 1, send 000 and 111. At the receiver, 000 and 111 are valid outputs; the other combinations are erased.
  - Case I transitions: input 00 is received as 00 with probability $q^2$, as 11 with probability $p^2$, and as 01 or 10 with probability $pq$ each; input 11 behaves symmetrically.

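- 42. Case I channel matrix, with outputs $y_1 = 00$, $y_2 = 11$ and the erased outputs 01 and 10 as $y_3$, $y_4$:

  |       | $y_1$ | $y_2$ | $y_3$ | $y_4$ |
  |-------|-------|-------|-------|-------|
  | $X_1$ | $q^2$ | $p^2$ | $pq$  | $pq$  |
  | $X_2$ | $p^2$ | $q^2$ | $pq$  | $pq$  |

  - Find $C$:
    $$C = (p^2 + q^2)\left[1 + \frac{q^2}{p^2+q^2}\log\frac{q^2}{p^2+q^2} + \frac{p^2}{p^2+q^2}\log\frac{p^2}{p^2+q^2}\right] = (p^2 + q^2)\left[1 - H\!\left(\frac{p^2}{p^2+q^2}\right)\right]$$
  - None of the above can be used for non-symmetric channels.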
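The closed form can be compared with a direct computation of $I(X,Y)$ from the Case I matrix. This sketch assumes equiprobable inputs and the output ordering $y_1 = 00$, $y_2 = 11$, $y_3 = 01$, $y_4 = 10$; helper names are illustrative. The result is per source bit, i.e. per pair of channel uses.

```python
import math


def h2(x):
    """Binary entropy in bits."""
    if x in (0.0, 1.0):
        return 0.0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)


def mutual_information(px, channel):
    """I(X,Y) in bits from p(x) and the channel matrix p(y/x)."""
    n = len(channel[0])
    py = [sum(px[i] * channel[i][j] for i in range(len(px))) for j in range(n)]
    return sum(px[i] * channel[i][j] * math.log2(channel[i][j] / py[j])
               for i in range(len(px)) for j in range(n)
               if px[i] * channel[i][j] > 0)


def repeat2_channel(p):
    """p(Y/X) when 0 -> 00 and 1 -> 11 are sent over a BSC with error probability p.
    Output order: y1 = 00, y2 = 11, y3 = 01, y4 = 10 (y3 and y4 are erased)."""
    q = 1 - p
    return [[q * q, p * p, p * q, p * q],
            [p * p, q * q, p * q, p * q]]


def repeat2_capacity(p):
    """Closed form: (p^2 + q^2) [1 - H(p^2 / (p^2 + q^2))]."""
    q = 1 - p
    s = p * p + q * q
    return s * (1 - h2(p * p / s))


p = 0.25
print("single BSC:", 1 - h2(p))
print("repeated (closed form):", repeat2_capacity(p))
print("repeated (direct I(X,Y)):", mutual_information([0.5, 0.5], repeat2_channel(p)))
```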

- 43. PROBLEMS - Assignment
  - Assume a BSC with $q = 3/4$, $p = 1/4$ and $p(0) = p(1) = 0.5$.
    - a. Calculate the improvement in channel capacity when each input is repeated twice.
    - b. Calculate the improvement in channel capacity when each input is repeated three times.
    - c. Calculate the change in channel capacity when 2 such BSCs are cascaded.
    - d. Calculate the change in channel capacity when 3 such BSCs are cascaded.

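- 44. Channel capacity of non-symmetric channels
  - With $[P] = P(Y/X)$, solve $[P][Q] = -[H]$ for the auxiliary variables $Q_1$ and $Q_2$:
    $$\begin{bmatrix} P_{11} & P_{12} \\ P_{21} & P_{22} \end{bmatrix}\begin{bmatrix} Q_1 \\ Q_2 \end{bmatrix} = \begin{bmatrix} P_{11}\log P_{11} + P_{12}\log P_{12} \\ P_{21}\log P_{21} + P_{22}\log P_{22} \end{bmatrix}$$
  - Then $C = \log_2\left(2^{Q_1} + 2^{Q_2}\right)$.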
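A sketch of the auxiliary-variable method in Python, generalised (as an assumption) from the 2x2 case to any square, non-singular channel matrix. It solves $[P][Q] = -[H]$ with a small Gauss-Jordan routine, then takes $C = \log_2 \sum_j 2^{Q_j}$. The method only gives the capacity when the implied input probabilities are non-negative, so the sketch recovers $p(x)$ from $p(y_j) = 2^{Q_j - C}$ and checks them. All function names are illustrative.

```python
import math


def solve_linear(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(a)
    m = [[float(v) for v in row] + [float(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[piv][col]) < 1e-12:
            raise ValueError("matrix is singular")
        m[col], m[piv] = m[piv], m[col]
        for r in range(n):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def capacity_auxiliary(channel):
    """Capacity via [P][Q] = -[H] and C = log2(sum_j 2^Q_j), for a square channel matrix."""
    # Right-hand side: -H_i = sum_j P_ij log2 P_ij for each row i.
    rhs = [sum(p * math.log2(p) for p in row if p > 0) for row in channel]
    q = solve_linear(channel, rhs)
    c = math.log2(sum(2.0 ** qj for qj in q))
    # Output probabilities p(y_j) = 2^(Q_j - C); recover p(x) from p(y) = P^T p(x).
    py = [2.0 ** (qj - c) for qj in q]
    px = solve_linear([list(col) for col in zip(*channel)], py)
    if min(px) < -1e-9:
        raise ValueError("negative input probabilities: the method does not apply")
    return c, px


c, px = capacity_auxiliary([[0.75, 0.25],
                            [0.25, 0.75]])   # BSC with p = 1/4
print(c, px)
```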

- 45. Channel capacity of non-symmetric channels
  - Find the channel capacity of
    $$P(Y/X) = \begin{bmatrix} 0.4 & 0.6 & 0 \\ 0.5 & 0 & 0.5 \\ 0 & 0.6 & 0.4 \end{bmatrix}$$
  - $C \approx 0.60$ bits
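As an independent cross-check, the sketch below uses the Blahut-Arimoto algorithm, a standard iterative method for channel capacity that is not part of these slides. It starts from equiprobable inputs and repeatedly reweights each $p(x_i)$ by $2^{D_i}$, where $D_i$ is the relative entropy between row $i$ and the current output distribution. The iteration count is an assumption; helper names are illustrative.

```python
import math


def mutual_information(px, channel):
    """I(X,Y) in bits for input distribution px and channel matrix p(y/x)."""
    n = len(channel[0])
    py = [sum(px[i] * channel[i][j] for i in range(len(px))) for j in range(n)]
    return sum(px[i] * channel[i][j] * math.log2(channel[i][j] / py[j])
               for i in range(len(px)) for j in range(n)
               if px[i] * channel[i][j] > 0)


def blahut_arimoto(channel, iterations=500):
    """Capacity of a discrete memoryless channel by Blahut-Arimoto iteration.

    Each step reweights p(x_i) by 2^{D_i}, where D_i is the relative entropy
    (in bits) between row i of the channel and the current output distribution.
    """
    m, n = len(channel), len(channel[0])
    px = [1.0 / m] * m                    # start from equiprobable inputs
    for _ in range(iterations):
        py = [sum(px[i] * channel[i][j] for i in range(m)) for j in range(n)]
        d = [sum(c * math.log2(c / py[j]) for j, c in enumerate(channel[i]) if c > 0)
             for i in range(m)]
        w = [px[i] * 2.0 ** d[i] for i in range(m)]
        total = sum(w)
        px = [x / total for x in w]
    return mutual_information(px, channel), px


C, px = blahut_arimoto([[0.4, 0.6, 0.0],
                        [0.5, 0.0, 0.5],
                        [0.0, 0.6, 0.4]])
print(f"C = {C:.4f} bits, optimal p(x) = {[round(p, 3) for p in px]}")
```

Because this channel is unchanged when $x_1$ and $x_3$ (and $y_1$ and $y_3$) are swapped, the optimal input gives $x_1$ and $x_3$ equal probability, which the printed distribution should reflect.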

- 46. EXTENSION OF ZERO-MEMORY SOURCE
  - The binary alphabet can be extended to $S^2$ to give 4 words: 00, 01, 10, 11.
  - The binary alphabet can be extended to $S^3$ to give 8 words: 000, 001, 010, 011, 100, 101, 110, 111.
  - For $m$ messages with $m = 2^n$, the $n$th extension of the binary source is required.

- 47. EXTENSION OF ZERO-MEMORY SOURCE
  - A zero-memory source $S$ has alphabet $\{x_1, x_2, \dots, x_m\}$.
  - Its $n$th extension, $S^n$, is a zero-memory source with $m'$ symbols $\{y_1, y_2, \dots, y_{m'}\}$.
  - $y_j = (x_{j_1}, x_{j_2}, \dots, x_{j_n})$
  - $p(y_j) = p(x_{j_1})\, p(x_{j_2}) \cdots p(x_{j_n})$
  - $H(S^n) = nH(S)$. Prove it.

- 48. $H(S^n) = nH(S)$
  - The zero-memory source $S$ has alphabet $\{x_1, x_2, \dots, x_m\}$, with the probability of $x_i$ equal to $p_i$.
  - The $n$th extension $S^n$ is a zero-memory source with $m' = m^n$ symbols $\{y_1, y_2, \dots, y_{m'}\}$, where $y_j = (x_{j_1}, \dots, x_{j_n})$ and $p(y_j) = p(x_{j_1})\, p(x_{j_2}) \cdots p(x_{j_n})$.
  - Complete probability scheme:
    $$\sum_{S^n} p(y_j) = \sum_{j_1}\sum_{j_2}\cdots\sum_{j_n} p(x_{j_1})\, p(x_{j_2}) \cdots p(x_{j_n}) = \sum_{j_1} p(x_{j_1}) \sum_{j_2} p(x_{j_2}) \cdots \sum_{j_n} p(x_{j_n}) = 1$$
  - Using this, $H(S^n) = -\sum_{S^n} p(y_j) \log p(y_j)$.

- 49. $H(S^n) = nH(S)$
  $$H(S^n) = -\sum_{S^n} p(y_j) \log\left[p(x_{j_1})\, p(x_{j_2}) \cdots p(x_{j_n})\right] = -\sum_{S^n} p(y_j)\log p(x_{j_1}) - \sum_{S^n} p(y_j)\log p(x_{j_2}) - \cdots \quad (n \text{ terms})$$
  - Here each term, for example the first, is
    $$-\sum_{S^n} p(y_j)\log p(x_{j_1}) = -\sum_{j_1}\sum_{j_2}\cdots\sum_{j_n} p(x_{j_1})\,p(x_{j_2})\cdots p(x_{j_n}) \log p(x_{j_1})$$
    $$= -\sum_{j_1} p(x_{j_1})\log p(x_{j_1}) \sum_{j_2} p(x_{j_2}) \cdots \sum_{j_n} p(x_{j_n}) = -\sum_{S} p(x_{j_1}) \log p(x_{j_1}) = H(S)$$
  - Adding the $n$ equal terms gives $H(S^n) = nH(S)$.

- 50. EXTENSION OF ZERO-MEMORY SOURCE
  - Problem 1: Find the entropy of the third extension $S^3$ of a binary source with $p(0) = 0.25$ and $p(1) = 0.75$. Show that the extended source satisfies the complete probability scheme and that its entropy is three times the primary entropy.
  - Problem 2: A source has alphabet $x_1, x_2, x_3, x_4$ with probabilities $p\{x_i\} = \{1/2, 1/4, 1/8, 1/8\}$. The source is extended to deliver messages of two symbols. Find the new alphabet and its probabilities. Show that the extended source satisfies the complete probability scheme and that its entropy is twice the primary entropy.

- 51. EXTENSION OF ZERO-MEMORY SOURCE
  - Problem 1: 8 combinations; $H(S) = 0.811$ bits/symbol, $H(S^3) = 2.434$ bits/symbol.
  - Problem 2: 16 combinations; $H(S) = 1.75$ bits/symbol, $H(S^2) = 3.5$ bits/symbol.
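Both problems can be checked by brute-force enumeration of the extended alphabet. This sketch (helper names illustrative) builds $S^n$ with `itertools.product`, multiplies the symbol probabilities as on item 47, and compares $H(S^n)$ with $nH(S)$.

```python
import itertools
import math


def entropy(probs):
    """H in bits, skipping zero probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def extension(probs, n):
    """Symbol probabilities of the n-th extension S^n of a zero-memory source.

    Each extended symbol is an n-tuple of source symbols; its probability is the
    product of the individual probabilities (zero memory means independence)."""
    return [math.prod(combo) for combo in itertools.product(probs, repeat=n)]


s = [0.25, 0.75]                      # Problem 1: p(0) = 0.25, p(1) = 0.75
s3 = extension(s, 3)
print(len(s3), sum(s3), entropy(s), entropy(s3), 3 * entropy(s))

t = [1/2, 1/4, 1/8, 1/8]              # Problem 2
t2 = extension(t, 2)
print(len(t2), sum(t2), entropy(t), entropy(t2), 2 * entropy(t))
```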

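- 52. EXTENSION OF BINARY CHANNELS
  - BSC with probability $q$ of correct and $p$ of wrong reception. Channel matrix for the second extension of the channel:

  | $p(Y/X)$ | Y = 00 | 01    | 10    | 11    |
  |----------|--------|-------|-------|-------|
  | X = 00   | $q^2$  | $pq$  | $pq$  | $p^2$ |
  | 01       | $pq$   | $q^2$ | $p^2$ | $pq$  |
  | 10       | $pq$   | $p^2$ | $q^2$ | $pq$  |
  | 11       | $p^2$  | $pq$  | $pq$  | $q^2$ |

  - For $C$: the un-extended inputs have probabilities $\{0.5, 0.5\}$; the extended inputs have probabilities $\{0.25, 0.25, 0.25, 0.25\}$.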

- 53. EXTENSION OF BINARY CHANNELS
  - Show that
    $$I(X^2, Y^2) = 2\,I(X, Y) = 2(1 + q \log q + p \log p)$$
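The identity can be checked numerically by building the second-extension matrix as products of single-use transition probabilities (a memoryless channel) and evaluating $I$ with equiprobable inputs. The value $p = 0.25$ and all helper names are illustrative assumptions.

```python
import itertools
import math


def mutual_information(px, channel):
    """I(X,Y) in bits for input distribution px and channel matrix p(y/x)."""
    n = len(channel[0])
    py = [sum(px[i] * channel[i][j] for i in range(len(px))) for j in range(n)]
    return sum(px[i] * channel[i][j] * math.log2(channel[i][j] / py[j])
               for i in range(len(px)) for j in range(n)
               if px[i] * channel[i][j] > 0)


def bsc(p):
    return [[1 - p, p], [p, 1 - p]]


def extend_channel(channel, n):
    """n-th extension of a memoryless channel: p(y1..yn / x1..xn) = prod_k p(yk / xk).
    Rows and columns are ordered 00..0, 00..1, ..., 11..1 (itertools.product order)."""
    inputs = list(itertools.product(range(len(channel)), repeat=n))
    outputs = list(itertools.product(range(len(channel[0])), repeat=n))
    return [[math.prod(channel[a][b] for a, b in zip(x, y)) for y in outputs]
            for x in inputs]


p = 0.25
ch2 = extend_channel(bsc(p), 2)
i1 = mutual_information([0.5, 0.5], bsc(p))
i2 = mutual_information([0.25] * 4, ch2)
print(i1, i2, 2 * i1)
```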

- 54. CASCADED CHANNELS
  - For a single BSC, $I(X,Y) = 1 - H(p)$, where $H(p) = -(p \log p + q \log q)$. Show that:
    - $I(X,Z) = 1 - H(2pq)$ for 2 cascaded BSCs;
    - $I(X,U) = 1 - H(3pq^2 + p^3)$ for 3 cascaded BSCs.
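The equivalent crossover probability of cascaded BSCs is the off-diagonal entry of the product of their channel matrices. This sketch (illustrative helper names, with $p = 0.25$ chosen as an example) computes the 1-, 2- and 3-stage crossovers and the corresponding $I = 1 - H(\cdot)$ for equiprobable inputs.

```python
import math


def h2(x):
    """Binary entropy H(x) in bits."""
    if x in (0.0, 1.0):
        return 0.0
    return -x * math.log2(x) - (1 - x) * math.log2(1 - x)


def bsc(p):
    return [[1 - p, p], [p, 1 - p]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def cascade(p, stages):
    """Equivalent channel of `stages` identical BSCs in series (product of their matrices)."""
    result = bsc(p)
    for _ in range(stages - 1):
        result = matmul(result, bsc(p))
    return result


p = 0.25
q = 1 - p
for stages in (1, 2, 3):
    crossover = cascade(p, stages)[0][1]
    print(stages, crossover, 1 - h2(crossover))   # I = 1 - H(crossover), equiprobable input
```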

- 55. SHANNON'S THEOREM
  - Given a source of $M$ equally likely messages, $M \gg 1$.
  - The source generates information at a rate $R$.
  - Given a channel with channel capacity $C$:
  - If $R \le C$, there exists a coding technique such that the messages can be transmitted and received at the receiver with an arbitrarily small probability of error.

- 56. NEGATIVE STATEMENT OF SHANNON'S THEOREM
  - Given a source of $M$ equally likely messages, $M \gg 1$.
  - The source generates information at a rate $R$.
  - Given a channel with channel capacity $C$:
  - If $R > C$, the probability of error is close to unity for every possible set of $M$ transmitted messages.
  - What is this channel capacity?

- 57. CAPACITY OF A GAUSSIAN CHANNEL (DISCRETE SIGNAL, CONTINUOUS CHANNEL)
  - The channel capacity of a band-limited white Gaussian channel is
    $$C = B \log_2\left(1 + \frac{S}{N}\right) \text{ bits/s}$$
  - $B$: channel bandwidth
  - $S$: signal power
  - $N$: noise power within the channel bandwidth
  - Also $N = \eta B$, where $\eta/2$ is the two-sided noise power spectral density.

- 58. Applicability
  - The formula is derived for the Gaussian channel.
  - It is applied to general physical systems because:
    1. Channels encountered in physical systems are generally approximately Gaussian.
    2. The result obtained for a Gaussian channel often provides a lower bound on the performance of a system operating over a non-Gaussian channel.

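- 59. PROOF
  - The source generates messages in the form of fixed voltage levels.
  - The levels are spaced $\lambda\sigma$ apart, at $\pm\lambda\sigma/2, \pm 3\lambda\sigma/2, \pm 5\lambda\sigma/2, \dots$. The RMS value of the noise is $\sigma$, and $\lambda$ is a large enough constant.
  - (Figure: multilevel signal $s(t)$ versus $t$, each level held for a symbol duration $T$.)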

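- 60. Average signal power
  - $M$ possible types of messages correspond to $M$ levels, all assumed equally likely.
  - The average signal power is
    $$S = \frac{2}{M}\left[\left(\frac{\lambda\sigma}{2}\right)^2 + \left(\frac{3\lambda\sigma}{2}\right)^2 + \left(\frac{5\lambda\sigma}{2}\right)^2 + \cdots + \left(\frac{(M-1)\lambda\sigma}{2}\right)^2\right]$$
    $$S = \frac{2}{M}\left(\frac{\lambda\sigma}{2}\right)^2\left[1 + 3^2 + 5^2 + \cdots + (M-1)^2\right] = \frac{2}{M}\left(\frac{\lambda\sigma}{2}\right)^2 \frac{M(M^2-1)}{6}$$
  - Hint: $\sum_{k=1}^{N} k^2 = \frac{N(N+1)(2N+1)}{6}$.
    $$S = \frac{M^2 - 1}{12}(\lambda\sigma)^2$$
  - Solving for $M$, with $N = \sigma^2$:
    $$M = \left[1 + \frac{12 S}{(\lambda\sigma)^2}\right]^{1/2} = \left[1 + \frac{12 S}{\lambda^2 N}\right]^{1/2}$$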
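The power calculation can be verified numerically. This sketch assumes an even number of levels $M$ (matching the symmetric layout $\pm\lambda\sigma/2, \pm 3\lambda\sigma/2, \dots$), averages the squared levels directly, compares with $(M^2-1)(\lambda\sigma)^2/12$, and inverts the result to recover $M$. The values of $\lambda$ and $\sigma$ are arbitrary illustrations.

```python
import math


def average_power(m, lam, sigma):
    """Mean power of M equally likely levels at ±lam*sigma/2, ±3*lam*sigma/2, ...

    Assumes M is even, matching the symmetric level layout of the slides."""
    if m < 2 or m % 2:
        raise ValueError("this sketch assumes an even number of levels M >= 2")
    positive_levels = [(2 * k - 1) * lam * sigma / 2 for k in range(1, m // 2 + 1)]
    # Each level has probability 1/M; +v and -v contribute the same power.
    return 2 / m * sum(v * v for v in positive_levels)


def closed_form_power(m, lam, sigma):
    """S = (M^2 - 1) (lam*sigma)^2 / 12."""
    return (m * m - 1) / 12 * (lam * sigma) ** 2


lam, sigma = math.sqrt(12), 0.5        # illustrative values
for m in (2, 4, 8, 16):
    s = average_power(m, lam, sigma)
    recovered_m = math.sqrt(1 + 12 * s / (lam * sigma) ** 2)
    print(m, s, closed_form_power(m, lam, sigma), recovered_m)
```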

- 61. Channel capacity
  - For $M$ equally likely messages:
    $$H = \log_2 M = \log_2\left[1 + \frac{12S}{\lambda^2 N}\right]^{1/2}$$
  - Let $\lambda^2 = 12$: $H = \frac{1}{2}\log_2\left(1 + \frac{S}{N}\right)$.
  - Square pulses acquire a rise time and a fall time through the channel; the rise time is $t_r = 0.5/B = 1/(2B)$.
  - A signal is detected correctly if $T$ is at least equal to $t_r$, so $T = 1/(2B)$ and the message rate is $r = 1/T = 2B$ messages/s.
    $$C = R_{\max} = 2B \cdot H = 2B \cdot \frac{1}{2}\log_2\left(1 + \frac{S}{N}\right) = B\log_2\left(1 + \frac{S}{N}\right)$$
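A small sketch of the final formula, computed both directly and as the proof builds it ($2B$ messages/s times $H = \frac{1}{2}\log_2(1 + S/N)$ bits/message). The bandwidth and the 30 dB SNR below are illustrative values, not from the slides; function names are hypothetical.

```python
import math


def shannon_hartley(bandwidth_hz, snr):
    """C = B log2(1 + S/N) in bits/s; snr is the linear power ratio S/N."""
    return bandwidth_hz * math.log2(1 + snr)


def capacity_from_levels(bandwidth_hz, snr):
    """Same result built as in the proof: 2B messages/s, each carrying 1/2 log2(1 + S/N) bits."""
    message_rate = 2 * bandwidth_hz                 # r = 1/T with T = 1/(2B)
    bits_per_message = 0.5 * math.log2(1 + snr)     # H with lambda^2 = 12
    return message_rate * bits_per_message


snr = 10 ** (30 / 10)                 # illustrative: 30 dB, i.e. S/N = 1000
print(shannon_hartley(3100, snr), capacity_from_levels(3100, snr))
```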

- 62. SHANNON'S LIMIT
  - In an ideal noiseless system, $N = 0$, so $S/N \to \infty$ and $C \to \infty$.
  - In a practical system, $N$ cannot be 0:
    - As $B$ increases, $C$ initially increases with $B$.
    - But the noise power $N = \eta B$ also grows with $B$ while the signal power $S$ stays fixed, so $S/N$ falls and the increase in $C$ with $B$ gradually slows down.
    - $C$ reaches a finite upper bound as $B \to \infty$.
    - This bound is called Shannon's limit.

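- 63. SHANNON'S LIMIT
  - A Gaussian channel has noise power spectral density $\eta/2$, so $N = \frac{\eta}{2} \cdot 2B = \eta B$.
    $$C = B\log_2\left(1 + \frac{S}{\eta B}\right) = \frac{S}{\eta}\cdot\frac{\eta B}{S}\log_2\left(1 + \frac{S}{\eta B}\right) = \frac{S}{\eta}\log_2\left(1 + \frac{S}{\eta B}\right)^{\eta B/S}$$
  - Using $\lim_{x \to 0}(1 + x)^{1/x} = e$ with $x = S/(\eta B)$: at Shannon's limit, $B \to \infty$ and $S/(\eta B) \to 0$, so
    $$C_\infty = \frac{S}{\eta}\log_2 e = 1.44\,\frac{S}{\eta}$$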
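The approach to Shannon's limit can be seen numerically. With illustrative values $S = 1$ and $\eta = 10^{-3}$ (assumptions, not from the slides), the sketch evaluates $C = B\log_2(1 + S/\eta B)$ for increasing $B$ and compares it with $C_\infty = (S/\eta)\log_2 e$.

```python
import math


def capacity(bandwidth_hz, signal_power, eta):
    """C = B log2(1 + S / (eta B)) bits/s; eta/2 is the two-sided noise PSD."""
    return bandwidth_hz * math.log2(1 + signal_power / (eta * bandwidth_hz))


S, eta = 1.0, 1e-3                   # illustrative values, so S/eta = 1000
limit = S / eta * math.log2(math.e)  # C_infinity = 1.44 S/eta
bandwidths = [1e2, 1e3, 1e4, 1e5, 1e6, 1e8]
caps = [capacity(b, S, eta) for b in bandwidths]
for b, c in zip(bandwidths, caps):
    print(f"B = {b:>11.0f} Hz   C = {c:8.2f} bits/s")
print(f"Shannon limit C_inf = {limit:.2f} bits/s")
```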

- 64. CHANNELS WITH FINITE MEMORY
  - Statistically independent sequence: the occurrence of a particular symbol during a symbol interval does not alter the probability of occurrence of a symbol during any other interval.
  - Most practical sources are statistically dependent.
  - Example, a source emitting English text:
    - After Q, the next letter is almost always U.
    - After a consonant, a vowel is more likely, and vice versa.
  - Statistical dependence reduces the amount of information coming out of the source.

- 65. CHANNELS WITH FINITE MEMORY: statistically dependent source
  - A source emits a symbol at every interval $T$.
  - Each symbol can take any value from $x_1, x_2, x_3, \dots, x_m$.
  - The positions are $s_1, s_2, s_3, \dots, s_{k-1}, s_k$, and each position can take any of the possible $x$.
  - The probability of occurrence of $x_i$ at position $s_k$ depends on the symbols at the previous $(k-1)$ positions.
  - Such sources are called Markov sources of order $(k-1)$.
  - Conditional probability: $p(x_i / s_1, s_2, s_3, \dots, s_{k-1})$
  - The behaviour of a Markov source can be predicted from its state diagram.

- 66. SECOND-ORDER MARKOV SOURCE: STATE DIAGRAM
  - In a second-order Markov source, the next symbol depends on the 2 previous symbols (memory = 2).
  - Let $m = 2$ (symbols 0, 1); the number of states is $2^2 = 4$:
    - A = 00
    - B = 01
    - C = 10
    - D = 11
  - Given $p(x_i / s_1 s_2)$:
    - $p(0/00) = p(1/11) = 0.6$
    - $p(1/00) = p(0/11) = 0.4$
    - $p(1/10) = p(0/01) = 0.5$
    - $p(0/10) = p(1/01) = 0.5$
  - (State diagram: states 00, 01, 10 and 11; emitting symbol $x$ from state $s_1 s_2$ leads to state $s_2 x$, with the transition probabilities above.)

- 67. State equations:
  - $p(A) = 0.6\,p(A) + 0.5\,p(C)$
  - $p(B) = 0.4\,p(A) + 0.5\,p(C)$
  - $p(C) = 0.5\,p(B) + 0.4\,p(D)$
  - $p(D) = 0.5\,p(B) + 0.6\,p(D)$
  - $p(A) + p(B) + p(C) + p(D) = 1$
  - Find $p(A)$, $p(B)$, $p(C)$, $p(D)$:
    - $p(A) = p(D) = 5/18$
    - $p(B) = p(C) = 2/9$

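- 68. Joint probabilities of state and next symbol:

  | $s_1 s_2 x_i$ | $p(x_i / s_1 s_2)$ | $p(s_1 s_2)$ | $p(s_1 s_2 x_i)$ |
  |---------------|--------------------|--------------|------------------|
  | 000           | 0.6                | 5/18         | 1/6              |
  | 001           | 0.4                | 5/18         | 1/9              |
  | 010           | 0.5                | 2/9          | 1/9              |
  | 011           | 0.5                | 2/9          | 1/9              |
  | 100           | 0.5                | 2/9          | 1/9              |
  | 101           | 0.5                | 2/9          | 1/9              |
  | 110           | 0.4                | 5/18         | 1/9              |
  | 111           | 0.6                | 5/18         | 1/6              |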

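- 69. Find the entropies.
  - $H(s_1 s_2)$ (only 4 combinations):
    $$H(s_1 s_2) = 2 \cdot \frac{5}{18}\log_2\frac{18}{5} + 2 \cdot \frac{2}{9}\log_2\frac{9}{2} \approx 1.991$$
  - $H(s_1 s_2 x_i)$:
    $$H(s_1 s_2 x_i) = 2 \cdot \frac{1}{6}\log_2 6 + 6 \cdot \frac{1}{9}\log_2 9 \approx 2.975$$
  - $H(x_i / s_1 s_2)$:
    $$H(x_i / s_1 s_2) = H(s_1 s_2 x_i) - H(s_1 s_2) \approx 2.975 - 1.991 = 0.984$$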
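The state probabilities and entropies of this example can be reproduced in Python. This sketch (illustrative names) finds the state probabilities by repeatedly applying the transition from state $s_1 s_2$ to state $s_2 x$, rather than solving the state equations algebraically, then computes $H(s_1 s_2)$, $H(s_1 s_2 x_i)$ and $H(x_i/s_1 s_2)$, the last both by subtraction and directly as $\sum p(s_1 s_2)\, H(x / s_1 s_2)$.

```python
import math

# p(x / s1 s2) for the second-order binary Markov source of item 66.
P_NEXT = {
    "00": {"0": 0.6, "1": 0.4},
    "01": {"0": 0.5, "1": 0.5},
    "10": {"0": 0.5, "1": 0.5},
    "11": {"0": 0.4, "1": 0.6},
}


def stationary(p_next, iterations=1000):
    """State probabilities by repeatedly applying the transition s1s2 --x--> s2x."""
    pi = {s: 1 / len(p_next) for s in p_next}
    for _ in range(iterations):
        new = {s: 0.0 for s in p_next}
        for s, probs in p_next.items():
            for x, p in probs.items():
                new[s[1] + x] += pi[s] * p
        pi = new
    return pi


def h(probs):
    """Entropy in bits, skipping zero probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


pi = stationary(P_NEXT)
joint = {s + x: pi[s] * p for s, probs in P_NEXT.items() for x, p in probs.items()}
H_state = h(pi.values())                 # H(s1 s2)
H_joint = h(joint.values())              # H(s1 s2 x)
H_cond = H_joint - H_state               # H(x / s1 s2) by subtraction
H_cond_direct = sum(pi[s] * h(probs.values()) for s, probs in P_NEXT.items())
print(pi)
print(f"H(s1s2) = {H_state:.3f}, H(s1s2x) = {H_joint:.3f}, H(x/s1s2) = {H_cond:.3f}")
```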

- 70. PROBLEMS
  - Identify the states and find all entropies.
  - (State diagram: two states A and B, with transitions labelled 2/3, 2/3, 1/3 and 1/3.)

- 71. PROBLEMS
  - Identify the states and find all entropies.
  - (State diagram: four states A, B, C and D (numbered 1 to 4), with transitions labelled 7/8, 7/8, 3/4, 3/4, 1/8, 1/8, 1/4 and 1/4.)

- 72. CONTINUOUS COMMUNICATION CHANNELS: continuous channels with continuous noise
  - Signals such as video and telemetry are analog.
  - Modulation techniques such as AM, FM and PM are continuous (analog).
  - Channel noise is white Gaussian noise.
  - We need to find the rate of transmission of information when analog signals are contaminated with continuous noise.

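- 73. CONTINUOUS COMMUNICATION CHANNELS
  $$H(X) = -\int_{-\infty}^{\infty} p(x)\log_2 p(x)\,dx, \quad \text{where } \int_{-\infty}^{\infty} p(x)\,dx = 1 \text{ (CPS)}$$
  $$H(Y) = -\int_{-\infty}^{\infty} p(y)\log_2 p(y)\,dy$$
  $$H(X,Y) = -\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} p(x,y)\log_2 p(x,y)\,dx\,dy$$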

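- 74. Conditional entropies and mutual information
  $$H(X/Y) = -\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} p(x,y)\log_2 p(x/y)\,dx\,dy$$
  $$H(Y/X) = -\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} p(x,y)\log_2 p(y/x)\,dx\,dy$$
  $$I(X,Y) = H(X) - H(X/Y)$$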

- 75. PROBLEMS
  - Find the entropy of $f(x) = bx^2$ for $0 \le x \le a$, and $f(x) = 0$ elsewhere. Check whether this function is a complete probability scheme; if not, find the value of $b$ that makes it one.
  - For a CPS, $\int_{-\infty}^{\infty} f(x)\,dx = \int_0^a bx^2\,dx = 1$, so $a^3 b/3 = 1$ and $b = 3/a^3$.
  - Using the standard solution, $H(X) = \frac{2}{3} + \ln\frac{a}{3}$ nats.
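The closed-form answer can be checked by numerical integration. This sketch uses the midpoint rule with an illustrative value $a = 2$ (not given in the problem), verifies that $b = 3/a^3$ makes the density integrate to 1, and compares the numerical entropy in nats with $2/3 + \ln(a/3)$. Function names are hypothetical.

```python
import math


def differential_entropy_nats(pdf, lo, hi, steps=100_000):
    """H(X) = -integral of f(x) ln f(x) dx over [lo, hi], by the midpoint rule."""
    dx = (hi - lo) / steps
    total = 0.0
    for k in range(steps):
        f = pdf(lo + (k + 0.5) * dx)
        if f > 0:
            total -= f * math.log(f) * dx
    return total


a = 2.0                      # illustrative value of a (not given in the problem)
b = 3 / a ** 3               # from the CPS condition a^3 b / 3 = 1


def pdf(x):
    return b * x * x if 0 <= x <= a else 0.0


steps = 100_000
area = sum(pdf((k + 0.5) * a / steps) for k in range(steps)) * a / steps
h_numeric = differential_entropy_nats(pdf, 0.0, a)
h_formula = 2 / 3 + math.log(a / 3)
print(area, h_numeric, h_formula)
```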

- 76. PROBLEMS
  - Find $H(X)$, $H(Y/X)$ and $I(X,Y)$ when $f(x) = x(4 - 3x)$ for $0 \le x \le 1$ and $f(y/x) = 64(2 - x - y)/(4 - 3x)$ for $0 \le y \le 1$.
  - Find $H(X)$, $H(Y/X)$ and $I(X,Y)$ when $f(x) = e^{-x}$ for $0 \le x < \infty$ and $f(y/x) = x e^{-xy}$ for $0 \le y < \infty$.
