TF(Term Frequency)-IDF(Inverse Document Frequency) from scratch in python .

In This Article I will explain how to implement tf-idf technique in python from scratch , this technique is used to find meaning of sentences consisting of words and cancels out the incapabilities of Bag of Words technique which is good for text classification or for helping a machine read words in numbers.

Table of Contents:

- Terminology .

- Term Frequency(TF) .

- Document Frequency .

- Inverse Document Frequency .

- Implementation in Python .

1 - Terminology :

- t — term (word)

- d — document (set of words)

- N — count of corpus

- corpus — the total document set

2 -Term Frequency (TF):

Suppose we have a set of English text documents and wish to rank which document is most relevant to the query , “Data Science is awesome !” A simple way to start out is by eliminating documents that do not contain all three words “Data”,”is”, “Science”, and “awesome”, but this still leaves many documents. To further distinguish them, we might count the number of times each term occurs in each document; the number of times a term occurs in a document is called its term frequency.

T

he weight of a term that occurs in a document is simply proportional to the term frequency.

Formula :

tf(t,d) = count of t in d / number of words in d

3 -Document Frequency :

This measures the importance of document in whole set of corpus, this is very similar to TF. The only difference is that TF is frequency counter for a term t in document d, where as DF is the count of occurrences of term t in the document set N. In other words, DF is the number of documents in which the word is present. We consider one occurrence if the term consists in the document at least once, we do not need to know the number of times the term is present.

df(t) = occurrence of t in documents

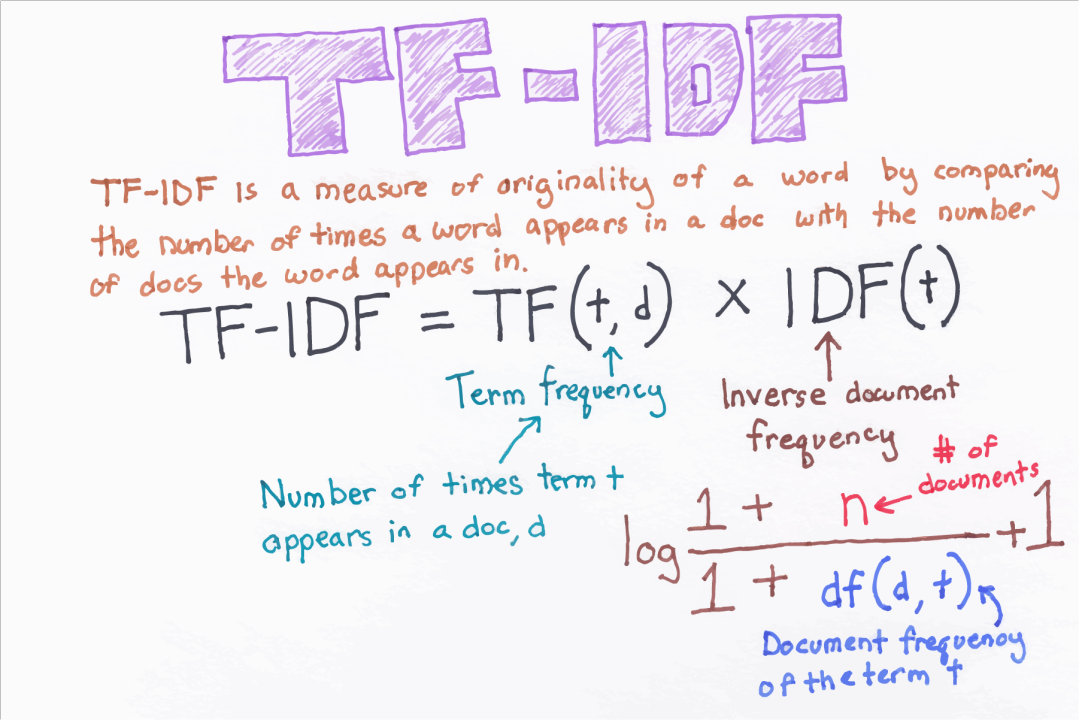

4 -Inverse Document Frequency(IDF):

While computing TF, all terms are considered equally important. However it is known that certain terms, such as “is”, “of”, and “that”, may appear a lot of times but have little importance. Thus we need to weigh down the frequent terms while scale up the rare ones, by computing IDF, an inverse document frequency factor is incorporated which diminishes the weight of terms that occur very frequently in the document set and increases the weight of terms that occur rarely.

IDF is the inverse of the document frequency which measures the informativeness of term t. When we calculate IDF, it will be very low for the most occurring words such as stop words (because stop words such as “is” is present in almost all of the documents, and N/df will give a very low value to that word). This finally gives what we want, a relative weightage.

idf(t) = N/df

Now there are few other problems with the IDF , in case of a large corpus,say 100,000,000 , the IDF value explodes , to avoid the effect we take the log of idf .

During the query time, when a word which is not in vocab occurs, the df will be 0. As we cannot divide by 0, we smoothen the value by adding 1 to the denominator.

that’s the final formula:

Formula :

idf(t) = log(N/(df + 1))

tf-idf now is a the right measure to evaluate how important a word is to a document in a collection or corpus.here are many different variations of TF-IDF but for now let us concentrate on the this basic version.

Formula :

tf-idf(t, d) = tf(t, d) * log(N/(df + 1))

5 -Implementing TF-IDF in Python From Scratch :

To make TF-IDF from scratch in python,let’s imagine those two sentences from diffrent document :

first_sentence : “Data Science is the sexiest job of the 21st century”.

second_sentence : “machine learning is the key for data science”.

First step we have to create the TF function to calculate total word frequency for all documents. Here are the codes below:

first as usual we should import the necessary libraries :

import pandas as pd import sklearn as sk

import math

so let’s load our sentences and combine them together in a single set :

first_sentence = "Data Science is the sexiest job of the 21st century"

second_sentence = "machine learning is the key for data science"

#split so each word have their own string

first_sentence = first_sentence.split(" ")

second_sentence = second_sentence.split(" ")#join them to remove common duplicate words

total= set(first_sentence).union(set(second_sentence))

print(total)

Output :

{'The', 'car', 'driven', 'highway', 'is', 'on', 'road', 'the', 'truck'}

Now lets add a way to count the words using a dictionary key-value pairing for both sentences :

wordDictA = dict.fromkeys(total, 0)

wordDictB = dict.fromkeys(total, 0)

for word in first_sentence:

wordDictA[word]+=1

for word in second_sentence:

wordDictB[word]+=1

Now we put them in a dataframe and then view the result:

pd.DataFrame([wordDictA, wordDictB])

No let’s writing the TF Function :

def computeTF(wordDict, doc):

tfDict = {}

corpusCount = len(doc)

for word, count in wordDict.items():

tfDict[word] = count/float(corpusCount)

return(tfDict)

#running our sentences through the tf function:

tfFirst = computeTF(wordDictA, first_sentence)

tfSecond = computeTF(wordDictB, second_sentence)

#Converting to dataframe for visualization

tf = pd.DataFrame([tfFirst, tfSecond])

and this is the expected output :

That’s all for TF formula , just i wanna talk about stop words that we should eliminate them because they are the most commonly occurring words which don’t give any additional value to the document vector .in-fact removing these will increase computation and space efficiency.

nltk library has a method to download the stopwords, so instead of explicitly mentioning all the stopwords ourselves we can just use the nltk library and iterate over all the words and remove the stop words. There are many efficient ways to do this, but ill just give a simple method.

those a sample of a stopwords in english language :

and this is a simple code to download stop words and removing them .

import nltk

from nltk.corpus import stopwords

set(stopwords.words('english'))

filtered_sentence = [w for w in WordictA if not w in stop_words]

output :

{ 'car', 'driven', 'highway','road','truck'}

And now that we finished the TF section, we move onto the IDF part:

def computeIDF(docList):

idfDict = {}

N = len(docList)

idfDict = dict.fromkeys(docList[0].keys(), 0)

for word, val in idfDict.items():

idfDict[word] = math.log10(N / float(val) + 1)

return(idfDict)

#inputing our sentences in the log file

idfs = computeIDF([wordDictA, wordDictB])

and now we implement the idf formula , let’s finish with calculating the TFIDF

def computeTFIDF(tfBow, idfs):

tfidf = {}

for word, val in tfBow.items():

tfidf[word] = val*idfs[word]

return(tfidf)

#running our two sentences through the IDF:

idfFirst = computeTFIDF(tfFirst, idfs)

idfSecond = computeTFIDF(tfSecond, idfs)

#putting it in a dataframe

idf= pd.DataFrame([idfFirst, idfSecond])

output :

That was a lot of work. But it is handy to know, if you are asked to code TF-IDF from scratch in the future. However, this can be done a lot simpler thanks to sklearn library. Let’s look at the example from them below:

#first step is to import the library from sklearn.feature_extraction.text import TfidfVectorizer #for the sentence, make sure all words are lowercase or you will run #into error. for simplicity, I just made the same sentence all #lowercase firstV= "Data Science is the sexiest job of the 21st century" secondV= "machine learning is the key for data science" #calling the TfidfVectorizer vectorize= TfidfVectorizer() #fitting the model and passing our sentences right away: response= vectorize.fit_transform([firstV, secondV])

and that’s the expected output :

Summary :

In this post we are going to explain how to use python and a natural language processing (NLP) technique known as Term Frequency — Inverse Document Frequency (tf-idf) to summarize documents.

We’ll areusing sklearn along with nltk to accomplish this task.

Remember that you can find the fully working code in my github repository here.

Thanks for reading and I will be glad to discuss any questions or corrections you may have :) Find me on LinkedIn if you want to discuss Machine Learning or anything else.

.