There are lots of reasons why a single AI-generated photo of an “explosion” near the Pentagon was obviously fake. But it was enough to fool many of Twitter’s top OSINT and financial breaking news accounts. A blue-checked “Bloomberg Feed” account, with no connection to Bloomberg itself, helped lend credibility to the fabricated event. Russian accounts were all too eager to jump into the fray, and the stock market began to drop until the Arlington, Va., fire department doused the rumor with a tweet of its own.

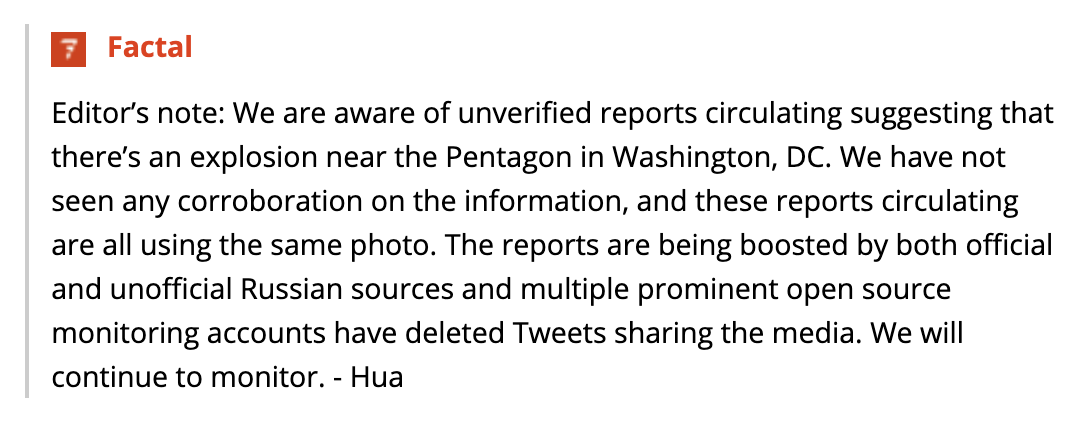

Factal’s own AI technology detected the first reports, and our editors were quick to realize the photo was likely a fake. The image didn’t quite match up with the Pentagon, the sources had inconsistent track records and the rest of the internet was suspiciously quiet about such a major event.

“It’s safe to say if there was any sort of incident near a government building in DC, especially at this magnitude, we would have seen dozens of angles of footage and reports from eyewitnesses,” explained Jillian Stampher, Factal’s head of news. “There were none in this case.”

Factal published this editor’s note (below) and answered our members’ questions in our secure chat:

We followed up a few minutes later with the confirmation from Arlington Fire and EMS. The entire non-event took just over 20 minutes, and the market quickly recovered.

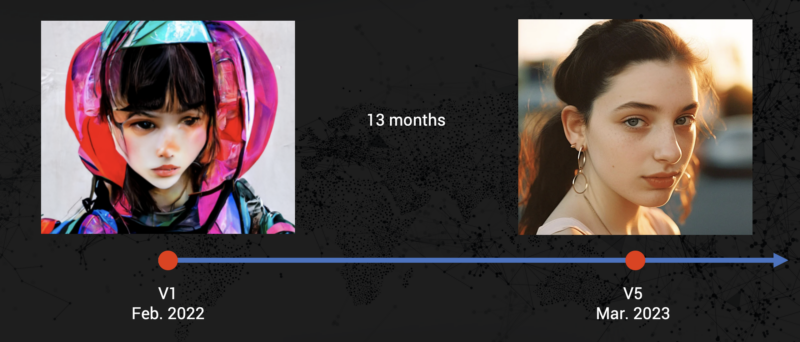

This is just a preview of an unsettling future. If something this rudimentary can dip the market, then imagine a world with hyper-realistic images, audio and video generated on the fly. Generative AI is improving at a breakneck pace; just look at the rate of improvement in research lab Midjourney’s algorithm across a 13-month period:

While today’s AI videos are jumpy, deformed and obviously fake, they’ll soon look as real as that photo (right). Together with advances in language and voice, generative AI has nearly reached the threshold of becoming indistinguishable from reality. The tech platforms and AI startups are making some progress with safeguards, but the technology is outpacing its own checks and balances and spilling into the open source community. Governments are struggling with a response.

Security and resilience teams are right to be worried about the dark side of generative AI. It will be weaponized to malign reputations, hack systems, dupe news organizations, throw elections and dispute fundamental facts. At the same time, social platforms are fragmenting across a wide spectrum of smaller and more private networks. An obviously fake explosion at the Pentagon is one thing, but when reality can be inexpensively manufactured at scale, the internet’s ability to “self-correct” will fall apart. By the time the record is set straight, the damage will already be done.

Navigating this new information landscape will be more challenging than ever. While noise delays reaction time, uncertain information paralyzes teams. If you don’t know what’s really happening, you’re hesitant to respond at all.

Factal wrote the book on real-time verification. With trusted information from Factal in hand, security and resilience teams can respond quickly, confidently and with clarity. We continue to invest in AI and journalism to enable companies to make sense of what’s happening. More than ever, we’re also helping security and resilience teams determine what isn’t happening. As generative AI edges closer to reality, separating fact from fiction will become a growing imperative for companies, both to protect against harm and to pursue emerging opportunities.

(Cory Bergman is the co-founder of Factal, co-host of the Global Security Briefing and a longtime journalist.)
